
ModErn Text Analysis

META Enumerates Textual Applications


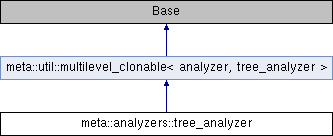

Base class for tokenizing with parse tree features.

#include <tree_analyzer.h>

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | `tree_analyzer(std::unique_ptr<token_stream> stream, const std::string& tagger_prefix, const std::string& parser_prefix)` | Creates a tree analyzer. |
| | `tree_analyzer(const tree_analyzer& other)` | Copy constructor. |
| `void` | `tokenize(corpus::document& doc) override` | Tokenizes a file into a document. |
| `void` | `add(std::unique_ptr<const tree_featurizer> featurizer)` | Adds a tree featurizer to the list. |

Public Member Functions inherited from `meta::util::multilevel_clonable< Root, Base, Derived >`

| Return type | Member | Description |
|---|---|---|
| `virtual std::unique_ptr<Root>` | `clone() const` | Clones this object. |
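`clone()` comes from `multilevel_clonable`. The sketch below shows one common way such a CRTP helper can work; it is an assumption about the general pattern, not MeTA's implementation, and `analyzer_root`, `clonable_sketch`, and `toy_tree_analyzer` are hypothetical names. The helper implements `clone()` once by copy-constructing `Derived`, so each concrete class gets a polymorphic clone without writing one by hand.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical root interface: every analyzer can be cloned polymorphically.
struct analyzer_root {
    virtual ~analyzer_root() = default;
    virtual std::unique_ptr<analyzer_root> clone() const = 0;
    virtual std::string name() const = 0;
};

// Simplified stand-in for a multilevel_clonable-style helper (hypothetical).
// Base is the class to inherit from (possibly an intermediate class below
// Root); Derived is the concrete class whose copy constructor does the work.
template <class Root, class Base, class Derived>
class clonable_sketch : public Base {
  public:
    using Base::Base; // forward Base's constructors

    std::unique_ptr<Root> clone() const override {
        // Copy-construct the most-derived object and return it as a Root.
        return std::make_unique<Derived>(static_cast<const Derived&>(*this));
    }
};

// Hypothetical concrete analyzer; clone() is supplied by the helper.
class toy_tree_analyzer
    : public clonable_sketch<analyzer_root, analyzer_root, toy_tree_analyzer> {
  public:
    explicit toy_tree_analyzer(std::string tag) : tag_{std::move(tag)} {}
    std::string name() const override { return "tree:" + tag_; }

  private:
    std::string tag_;
};
```

Calling `clone()` through an `analyzer_root` pointer yields a new `toy_tree_analyzer` with the same state.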

Static Public Attributes

| Type | Name | Description |
|---|---|---|
| `static const std::string` | `id = "tree"` | Identifier for this analyzer. |
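The static `id` gives the analyzer a string name. One common use for such a member is as the lookup key in a factory that builds analyzers by name. The registry below is a hypothetical sketch of that idea; `analyzer_registry`, `analyzer_base`, and `tree_analyzer_stub` are invented for illustration and are not MeTA's factory API.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <stdexcept>
#include <string>

// Hypothetical common base for analyzers created by name.
struct analyzer_base {
    virtual ~analyzer_base() = default;
    virtual std::string kind() const = 0;
};

// Hypothetical stand-in mirroring the documented static member `id`.
struct tree_analyzer_stub : analyzer_base {
    static const std::string id;
    std::string kind() const override { return id; }
};
const std::string tree_analyzer_stub::id = "tree";

// Hypothetical registry: maps each analyzer's static id to a factory function.
class analyzer_registry {
  public:
    template <class Analyzer>
    void add() {
        factories_[Analyzer::id] = [] { return std::make_unique<Analyzer>(); };
    }

    // Builds the analyzer registered under `id`, or throws if none exists.
    std::unique_ptr<analyzer_base> create(const std::string& id) const {
        auto it = factories_.find(id);
        if (it == factories_.end())
            throw std::invalid_argument{"unknown analyzer id: " + id};
        return it->second();
    }

  private:
    std::map<std::string, std::function<std::unique_ptr<analyzer_base>()>>
        factories_;
};
```

Keying on a static member keeps the name next to the class that owns it, so registration code never repeats the string literal.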

Private Attributes

| Type | Name | Description |
|---|---|---|
| `std::shared_ptr<std::vector<std::unique_ptr<const tree_featurizer>>>` | `featurizers_` | A list of tree_featurizers to run on each parse tree. |
| `std::unique_ptr<token_stream>` | `stream_` | The token stream for extracting tokens. |
| `std::shared_ptr<const sequence::perceptron>` | `tagger_` | The tagger used for tagging individual sentences. |
| `std::shared_ptr<const parser::sr_parser>` | `parser_` | The parser used to parse individual sentences. |

Base class for tokenizing with parse tree features.

`meta::analyzers::tree_analyzer::tree_analyzer(const tree_analyzer& other)`

Copy constructor.

Parameters:
`other`: The other tree_analyzer to copy from.
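Because `stream_` is a `std::unique_ptr`, the implicitly generated copy constructor would be deleted, which is presumably why a copy constructor is declared explicitly. A plausible shape for it, consistent with the member types and with the documentation of `tagger_` and `parser_` (shared among all copies), is to clone the stream and copy the `shared_ptr`s. This sketch uses hypothetical stand-in types (`model`, `token_stream_stub`, `tree_analyzer_sketch`) and is not MeTA's source; `featurizers_` is omitted for brevity.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical read-only model, standing in for tagger or parser weights.
struct model {
    std::vector<double> weights;
};

// Hypothetical token stream with per-copy mutable state.
struct token_stream_stub {
    std::string text;
    std::size_t pos = 0;
    std::unique_ptr<token_stream_stub> clone() const {
        return std::make_unique<token_stream_stub>(*this);
    }
};

class tree_analyzer_sketch {
  public:
    tree_analyzer_sketch(std::unique_ptr<token_stream_stub> stream,
                         std::shared_ptr<const model> tagger,
                         std::shared_ptr<const model> parser)
        : stream_{std::move(stream)}, tagger_{std::move(tagger)},
          parser_{std::move(parser)} {
        assert(stream_ && tagger_ && parser_);
    }

    // Copy: deep-copy the mutable stream, share the immutable models.
    tree_analyzer_sketch(const tree_analyzer_sketch& other)
        : stream_{other.stream_->clone()}, tagger_{other.tagger_},
          parser_{other.parser_} {}

    const token_stream_stub* stream() const { return stream_.get(); }
    const model* tagger() const { return tagger_.get(); }
    const model* parser() const { return parser_.get(); }

  private:
    std::unique_ptr<token_stream_stub> stream_;
    std::shared_ptr<const model> tagger_;
    std::shared_ptr<const model> parser_;
};
```

Each copy can then advance its own stream independently, while the `const` models are only read, so one instance can serve every copy.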

`void meta::analyzers::tree_analyzer::tokenize(corpus::document& doc)` (override)

Tokenizes a file into a document.

Parameters:
`doc`: The document to store the tokenized information in.
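Per the member list, `tokenize` runs each registered tree featurizer on each parse tree. The sketch below models only that stage and assumes the sentences have already been tagged and parsed. `parse_tree`, `document`, `tree_featurizer_sketch`, `label_featurizer`, `depth_featurizer_sketch`, and `featurizer_pipeline` are hypothetical stand-ins, not MeTA types; the featurizer list sits behind a `shared_ptr` to mirror the declared type of `featurizers_`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical minimal parse tree: a node label plus children.
struct parse_tree {
    std::string label;
    std::vector<parse_tree> children;
};

// Hypothetical document: feature name -> count.
struct document {
    std::map<std::string, double> counts;
    void increment(const std::string& feature, double amount) {
        counts[feature] += amount;
    }
};

// Hypothetical featurizer interface, similar in role to tree_featurizer.
struct tree_featurizer_sketch {
    virtual ~tree_featurizer_sketch() = default;
    virtual void featurize(document& doc, const parse_tree& tree) const = 0;
};

// Counts every node label in the tree (e.g. "NP", "VP").
struct label_featurizer : tree_featurizer_sketch {
    void featurize(document& doc, const parse_tree& tree) const override {
        doc.increment("label-" + tree.label, 1);
        for (const auto& child : tree.children)
            featurize(doc, child);
    }
};

// Records the height of each whole tree.
struct depth_featurizer_sketch : tree_featurizer_sketch {
    static std::size_t height(const parse_tree& t) {
        std::size_t h = 0;
        for (const auto& c : t.children)
            h = std::max(h, height(c));
        return h + 1;
    }
    void featurize(document& doc, const parse_tree& tree) const override {
        doc.increment("depth-" + std::to_string(height(tree)), 1);
    }
};

class featurizer_pipeline {
    using featurizer_list =
        std::vector<std::unique_ptr<const tree_featurizer_sketch>>;

  public:
    // Takes ownership of a featurizer and appends it to the shared list.
    void add(std::unique_ptr<const tree_featurizer_sketch> f) {
        assert(f != nullptr);
        featurizers_->push_back(std::move(f));
    }

    // Runs every featurizer on every (already parsed) sentence tree.
    void tokenize(document& doc, const std::vector<parse_tree>& trees) const {
        for (const auto& tree : trees)
            for (const auto& f : *featurizers_)
                f->featurize(doc, tree);
    }

  private:
    std::shared_ptr<featurizer_list> featurizers_ =
        std::make_shared<featurizer_list>();
};
```

For the tree `(S (NP) (VP (V)))`, the two featurizers above add one count each for `label-S`, `label-NP`, `label-VP`, `label-V`, and `depth-3`.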

`std::shared_ptr<const sequence::perceptron> meta::analyzers::tree_analyzer::tagger_` (private)

The tagger used for tagging individual sentences.

This is shared among all copies of this analyzer, so only one copy of the tagger's model is kept across all of the threads used during tokenization.

`std::shared_ptr<const parser::sr_parser> meta::analyzers::tree_analyzer::parser_` (private)

The parser used to parse individual sentences.

This is shared among all copies of this analyzer, so only one copy of the parser's model is kept across all of the threads used during tokenization.

1.8.9.1
