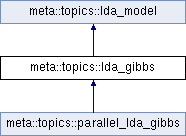

An LDA topic model implemented using a collapsed Gibbs sampler.


#include <lda_gibbs.h>

| Type | Member | Description |
| --- | --- | --- |
| | lda_gibbs (std::shared_ptr< index::forward_index > idx, uint64_t num_topics, double alpha, double beta) | Constructs the LDA model over the given documents, with the given number of topics, and hyperparameters \(\alpha\) and \(\beta\) for the priors on \(\phi\) (topic distributions) and \(\theta\) (topic proportions), respectively. |
| virtual | ~lda_gibbs ()=default | Destructor: virtual for potential subclassing. |
| virtual void | run (uint64_t num_iters, double convergence=1e-6) | Runs the sampler for a maximum number of iterations, or until the given convergence criterion is met. |
| | lda_model (std::shared_ptr< index::forward_index > idx, uint64_t num_topics) | Constructs an lda_model over the given set of documents and with a fixed number of topics. |
| virtual | ~lda_model ()=default | Destructor. |
| void | save_doc_topic_distributions (const std::string &filename) const | Saves the topic proportions \(\theta_d\) for each document to the given file. |
| void | save_topic_term_distributions (const std::string &filename) const | Saves the term distributions \(\phi_j\) for each topic to the given file. |
| void | save (const std::string &prefix) const | Saves the current model to a set of files beginning with prefix: prefix.phi, prefix.theta, and prefix.terms. |
| topic_id | sample_topic (term_id term, doc_id doc) | Samples a topic from the full conditional distribution \(P(z_i = j \mid w, \boldsymbol{z})\). |
| virtual double | compute_sampling_weight (term_id term, doc_id doc, topic_id topic) const | Computes a weight proportional to \(P(z_i = j \mid w, \boldsymbol{z})\). |
| virtual double | compute_term_topic_probability (term_id term, topic_id topic) const override | |
| virtual double | compute_doc_topic_probability (doc_id doc, topic_id topic) const override | |
| virtual void | initialize () | Initializes the first set of topic assignments for inference. |
| virtual void | perform_iteration (uint64_t iter, bool init=false) | Performs a sampling iteration. |
| virtual void | decrease_counts (topic_id topic, term_id term, doc_id doc) | Decreases all counts associated with the given topic, term, and document by one. |
| virtual void | increase_counts (topic_id topic, term_id term, doc_id doc) | Increases all counts associated with the given topic, term, and document by one. |
| double | corpus_log_likelihood () const | |
| lda_gibbs & | operator= (const lda_gibbs &)=delete | lda_gibbs cannot be copy assigned. |
| | lda_gibbs (const lda_gibbs &other)=delete | lda_gibbs cannot be copy constructed. |
| lda_model & | operator= (const lda_model &)=delete | lda_models cannot be copy assigned. |
| | lda_model (const lda_model &)=delete | lda_models cannot be copy constructed. |

An LDA topic model implemented using a collapsed Gibbs sampler.

See also: http://www.pnas.org/content/101/suppl.1/5228.full.pdf

meta::topics::lda_gibbs::lda_gibbs (std::shared_ptr< index::forward_index > idx, uint64_t num_topics, double alpha, double beta)

Constructs the LDA model over the given documents, with the given number of topics, and hyperparameters \(\alpha\) and \(\beta\) for the priors on \(\phi\) (topic distributions) and \(\theta\) (topic proportions), respectively.

Parameters

- idx: The index that contains the documents to model
- num_topics: The number of topics to infer
- alpha: The hyperparameter for the Dirichlet prior over \(\phi\)
- beta: The hyperparameter for the Dirichlet prior over \(\theta\)

void meta::topics::lda_gibbs::run (uint64_t num_iters, double convergence = 1e-6) [virtual]

Runs the sampler for a maximum number of iterations, or until the given convergence criterion is met.

Convergence is measured as the relative difference in corpus log likelihood between two iterations.

Parameters

- num_iters: The maximum number of iterations to run the sampler for
- convergence: The smallest relative difference in \(\log P(\mathbf{w} \mid \mathbf{z})\) allowed before the sampler is considered to have converged

Implements meta::topics::lda_model.
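The stopping rule described for run can be sketched as a small driver loop. This is a minimal illustration, not the library's code: `run_until_converged` and the `step` callback (standing in for one sampling sweep that reports the corpus log likelihood) are hypothetical names, and whether the real comparison is strict is an assumption.

```cpp
#include <cmath>
#include <cstdint>
#include <functional>

// Hypothetical driver: `step` performs one sweep and returns the corpus
// log likelihood afterwards. Returns the number of sweeps performed.
inline uint64_t run_until_converged(uint64_t num_iters, double convergence,
                                    const std::function<double()>& step)
{
    double previous = 0.0;
    bool have_previous = false;
    for (uint64_t iter = 1; iter <= num_iters; ++iter)
    {
        const double current = step();
        if (have_previous && previous != 0.0)
        {
            // Relative change in log likelihood between two sweeps.
            const double ratio = std::fabs((previous - current) / previous);
            if (ratio <= convergence)
                return iter; // assumed: "at or below" counts as converged
        }
        previous = current;
        have_previous = true;
    }
    return num_iters; // iteration budget exhausted
}
```

With the default convergence of 1e-6, the loop stops as soon as two successive log likelihoods agree to roughly six significant digits.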

topic_id meta::topics::lda_gibbs::sample_topic (term_id term, doc_id doc) [protected]

Samples a topic from the full conditional distribution \(P(z_i = j \mid w, \boldsymbol{z})\).

Used in both initialization and each normal iteration of the sampler, after removing the current value of \(z_i\) from the vector of assignments \(\boldsymbol{z}\).

Parameters

- term: The term we are sampling a topic assignment for
- doc: The document the term resides in

Returns

- the topic sampled for the given (term, doc) pair
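Sampling from the full conditional only needs weights that are proportional to it. A minimal sketch of one common way to draw from unnormalized weights follows; `sample_index` is a hypothetical helper, not a member of this class, and it assumes a non-empty weight vector with a positive sum.

```cpp
#include <cstddef>
#include <random>
#include <vector>

// Draws index j with probability weights[j] / sum(weights) by walking the
// running sum until it exceeds a uniform draw in [0, total).
// Precondition (assumed): weights is non-empty and its sum is positive.
template <class Generator>
std::size_t sample_index(const std::vector<double>& weights, Generator& rng)
{
    double total = 0.0;
    for (double w : weights)
        total += w;

    std::uniform_real_distribution<double> dist{0.0, total};
    const double u = dist(rng);

    double running = 0.0;
    for (std::size_t j = 0; j < weights.size(); ++j)
    {
        running += weights[j];
        if (u < running)
            return j;
    }
    return weights.size() - 1; // guards against rounding at the upper edge
}
```

Because only ratios of weights matter, no normalization pass over the topics is required before drawing.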

double meta::topics::lda_gibbs::compute_sampling_weight (term_id term, doc_id doc, topic_id topic) const [protected, virtual]

Computes a weight proportional to \(P(z_i = j \mid w, \boldsymbol{z})\).

Parameters

- term: The current word we are sampling for
- doc: The document in which the term resides
- topic: The topic \(j\) we want to compute the probability for

Returns

- a weight proportional to the probability that the given term in the given document belongs to the given topic

Reimplemented in meta::topics::parallel_lda_gibbs.

double meta::topics::lda_gibbs::compute_term_topic_probability (term_id term, topic_id topic) const [override, protected, virtual]

Returns

- the probability that the given term appears in the given topic

Parameters

- term: The term we are concerned with.
- topic: The topic we are concerned with.

Implements meta::topics::lda_model.

double meta::topics::lda_gibbs::compute_doc_topic_probability (doc_id doc, topic_id topic) const [override, protected, virtual]

Returns

- the probability that the given topic is picked for the given document

Parameters

- doc: The document we are concerned with.
- topic: The topic we are concerned with.

Implements meta::topics::lda_model.

void meta::topics::lda_gibbs::initialize () [protected, virtual]

Initializes the first set of topic assignments for inference.

Applies the sampler online: only the counts for words observed so far in the loop are considered.

Reimplemented in meta::topics::parallel_lda_gibbs.
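The online initialization described above can be illustrated on a toy corpus: all counts start at zero, and each token's topic is drawn using only the counts contributed by tokens already visited. Everything in this sketch (`toy_state`, `online_initialize`, the neutral prior names) is hypothetical and does not use the forward index; it assumes both priors are positive.

```cpp
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical sampler state for a toy corpus.
struct toy_state
{
    std::vector<std::vector<std::size_t>> assignments; // [doc][position]
    std::vector<std::vector<double>> term_topic;       // [topic][term]
    std::vector<std::vector<double>> doc_topic;        // [doc][topic]
    std::vector<double> topic_total;                   // [topic]
};

// docs[d] lists the term id of every token in document d.
// Both priors must be positive so that every weight is positive.
template <class Generator>
toy_state online_initialize(const std::vector<std::vector<std::size_t>>& docs,
                            std::size_t num_topics, std::size_t vocab_size,
                            double prior_phi, double prior_theta,
                            Generator& rng)
{
    toy_state s;
    s.assignments.resize(docs.size());
    s.term_topic.assign(num_topics, std::vector<double>(vocab_size, 0.0));
    s.doc_topic.assign(docs.size(), std::vector<double>(num_topics, 0.0));
    s.topic_total.assign(num_topics, 0.0);

    std::vector<double> weights(num_topics);
    for (std::size_t d = 0; d < docs.size(); ++d)
    {
        for (std::size_t term : docs[d])
        {
            // Counts reflect only the tokens seen so far. The document
            // length term is the same for every topic, so it is omitted.
            for (std::size_t j = 0; j < num_topics; ++j)
            {
                weights[j] = (s.term_topic[j][term] + prior_phi)
                             / (s.topic_total[j] + vocab_size * prior_phi)
                             * (s.doc_topic[d][j] + prior_theta);
            }
            std::discrete_distribution<std::size_t> pick(weights.begin(),
                                                         weights.end());
            const std::size_t z = pick(rng);

            // Record the assignment and make it visible to later tokens.
            s.assignments[d].push_back(z);
            s.term_topic[z][term] += 1.0;
            s.doc_topic[d][z] += 1.0;
            s.topic_total[z] += 1.0;
        }
    }
    return s;
}
```

The very first token sees only the priors, so its topic is drawn uniformly; later tokens are increasingly influenced by earlier assignments.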

void meta::topics::lda_gibbs::perform_iteration (uint64_t iter, bool init = false) [protected, virtual]

Performs a sampling iteration.

Parameters

- iter: The iteration number
- init: Whether or not to employ the online method (defaults to false)

Reimplemented in meta::topics::parallel_lda_gibbs.

void meta::topics::lda_gibbs::decrease_counts (topic_id topic, term_id term, doc_id doc) [protected, virtual]

Decreases all counts associated with the given topic, term, and document by one.

Parameters

- topic: The topic in question
- term: The term in question
- doc: The document in question

Reimplemented in meta::topics::parallel_lda_gibbs.

void meta::topics::lda_gibbs::increase_counts (topic_id topic, term_id term, doc_id doc) [protected, virtual]

Increases all counts associated with the given topic, term, and document by one.

Parameters

- topic: The topic in question
- term: The term in question
- doc: The document in question

Reimplemented in meta::topics::parallel_lda_gibbs.
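The two count updates are inverses of each other: a token's old assignment is removed before resampling and the new one is added afterwards. A minimal sketch with hypothetical count tables follows; `count_tables` and its layout are assumptions made for illustration, not the class's actual members.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical count tables touched when one token changes topic.
struct count_tables
{
    std::vector<std::vector<uint64_t>> term_topic; // [topic][term]
    std::vector<std::vector<uint64_t>> doc_topic;  // [doc][topic]
    std::vector<uint64_t> topic_total;             // [topic]

    count_tables(std::size_t num_topics, std::size_t num_terms,
                 std::size_t num_docs)
        : term_topic(num_topics, std::vector<uint64_t>(num_terms, 0)),
          doc_topic(num_docs, std::vector<uint64_t>(num_topics, 0)),
          topic_total(num_topics, 0)
    {
    }

    // Adds one token of `term` in `doc` to `topic`.
    void increase(std::size_t topic, std::size_t term, std::size_t doc)
    {
        ++term_topic[topic][term];
        ++doc_topic[doc][topic];
        ++topic_total[topic];
    }

    // Removes one token of `term` in `doc` from `topic`.
    // Precondition: the token was previously added with increase().
    void decrease(std::size_t topic, std::size_t term, std::size_t doc)
    {
        --term_topic[topic][term];
        --doc_topic[doc][topic];
        --topic_total[topic];
    }
};
```

In a sweep, a token would typically go through decrease with its old topic, a fresh draw from the full conditional, and increase with the new topic.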

double meta::topics::lda_gibbs::corpus_log_likelihood () const [protected]

Returns

- \(\log P(\mathbf{w} \mid \mathbf{z})\)
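For reference, the paper linked under "See also" gives \(P(\mathbf{w} \mid \mathbf{z})\) in closed form as a product of Gamma-function ratios over topics. The sketch below evaluates the logarithm of that expression from a topic-by-term count matrix. It follows the paper's formula and is not necessarily how this member computes the value; the function name and the neutral `prior_phi` parameter (the Dirichlet hyperparameter on \(\phi\)) are assumptions.

```cpp
#include <cmath>
#include <vector>

// term_topic[j][w] is the number of tokens of term w assigned to topic j.
// Every row is assumed to have one entry per vocabulary term.
inline double log_likelihood_given_assignments(
    const std::vector<std::vector<double>>& term_topic, double prior_phi)
{
    double result = 0.0;
    for (const auto& row : term_topic)
    {
        const double vocab = static_cast<double>(row.size());
        // Normalizing constant of the symmetric Dirichlet prior.
        result += std::lgamma(vocab * prior_phi)
                  - vocab * std::lgamma(prior_phi);

        double topic_total = 0.0;
        for (double n : row)
        {
            result += std::lgamma(n + prior_phi);
            topic_total += n;
        }
        result -= std::lgamma(topic_total + vocab * prior_phi);
    }
    return result;
}
```

Working with `std::lgamma` keeps the computation in log space, which avoids overflow for realistic corpus sizes.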

std::vector<std::vector<topic_id> > meta::topics::lda_gibbs::doc_word_topic_ [protected]

The topic assignment for every word in every document.

Note that the same word occurring multiple times in one document can have a different topic assigned to each occurrence, so this is not indexed by term_id but by an intra-document position constructed for this purpose.

Indexed as [doc_id][position].
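One way to obtain such positions is to expand each document's (term, count) pairs into one slot per occurrence. The sketch below assumes that scheme for illustration; `expand_positions` is a hypothetical helper, and the ordering of positions in the real class may differ.

```cpp
#include <cstdint>
#include <utility>
#include <vector>

// Expands (term id, count) pairs into one entry per token. The index into
// the result plays the role of the intra-document position, so a term that
// occurs twice owns two slots that can carry different topics.
inline std::vector<uint64_t> expand_positions(
    const std::vector<std::pair<uint64_t, uint64_t>>& term_counts)
{
    std::vector<uint64_t> terms;
    for (const auto& tc : term_counts)
    {
        for (uint64_t k = 0; k < tc.second; ++k)
            terms.push_back(tc.first);
    }
    return terms;
}
```

A per-document vector of topic assignments sized to match the result then gives the [doc_id][position] layout described above.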

